Alpaca is excited to announce the launch of the newest revision of our Order Management System (OMS). After several years of high-volume algorithmic trading, we set out to use our experience and historical performance data to make major improvements to our order processing latency and scalability.

While our current OMS has served us very well, Alpaca is experiencing tremendous growth, both in direct trading clients via our Trading API and in new retail-type clients via our Broker API partners. Performance has always been core to Alpaca’s DNA, and we wanted to build a best-of-breed system that delivers significant performance improvements and can keep up with our rapid growth.

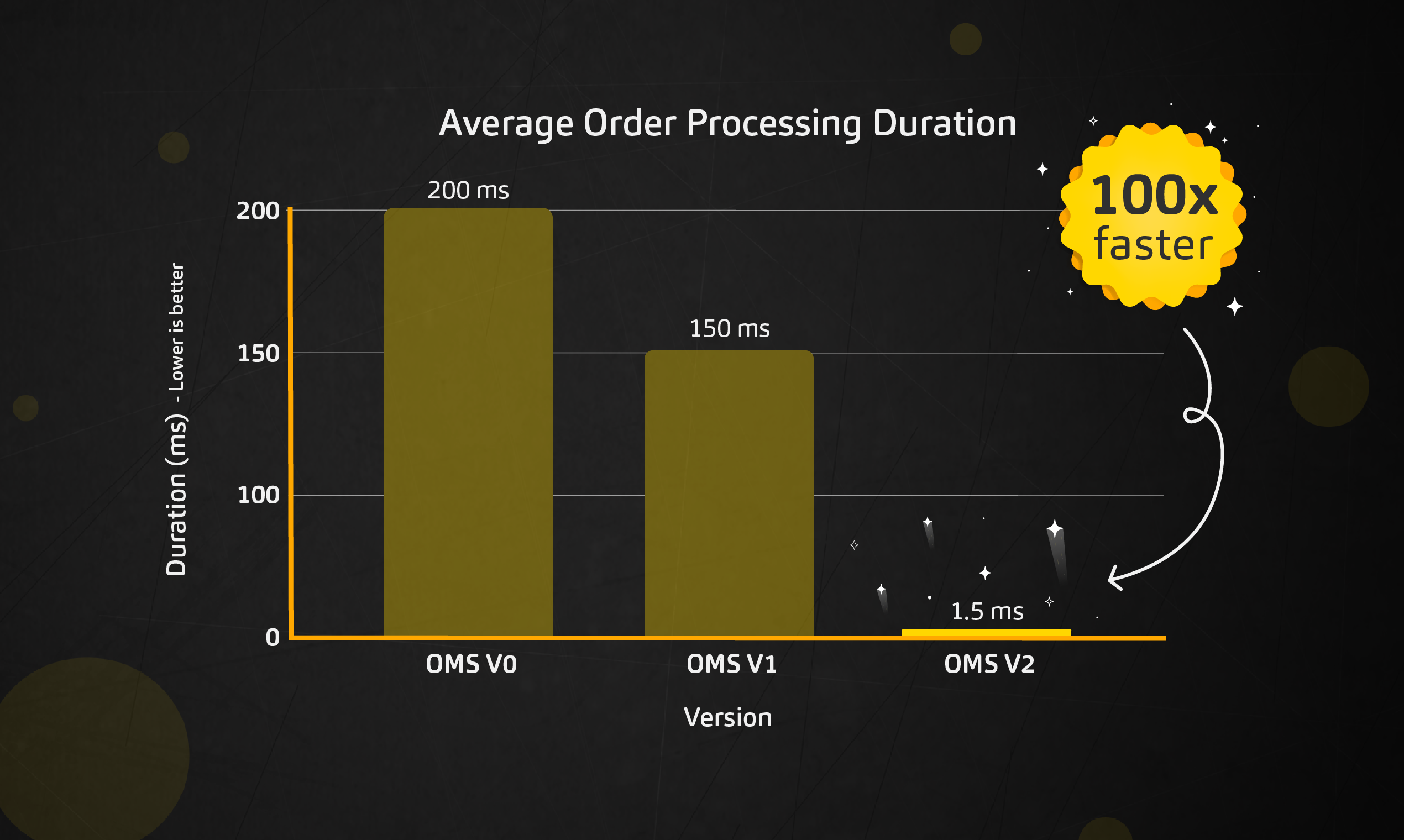

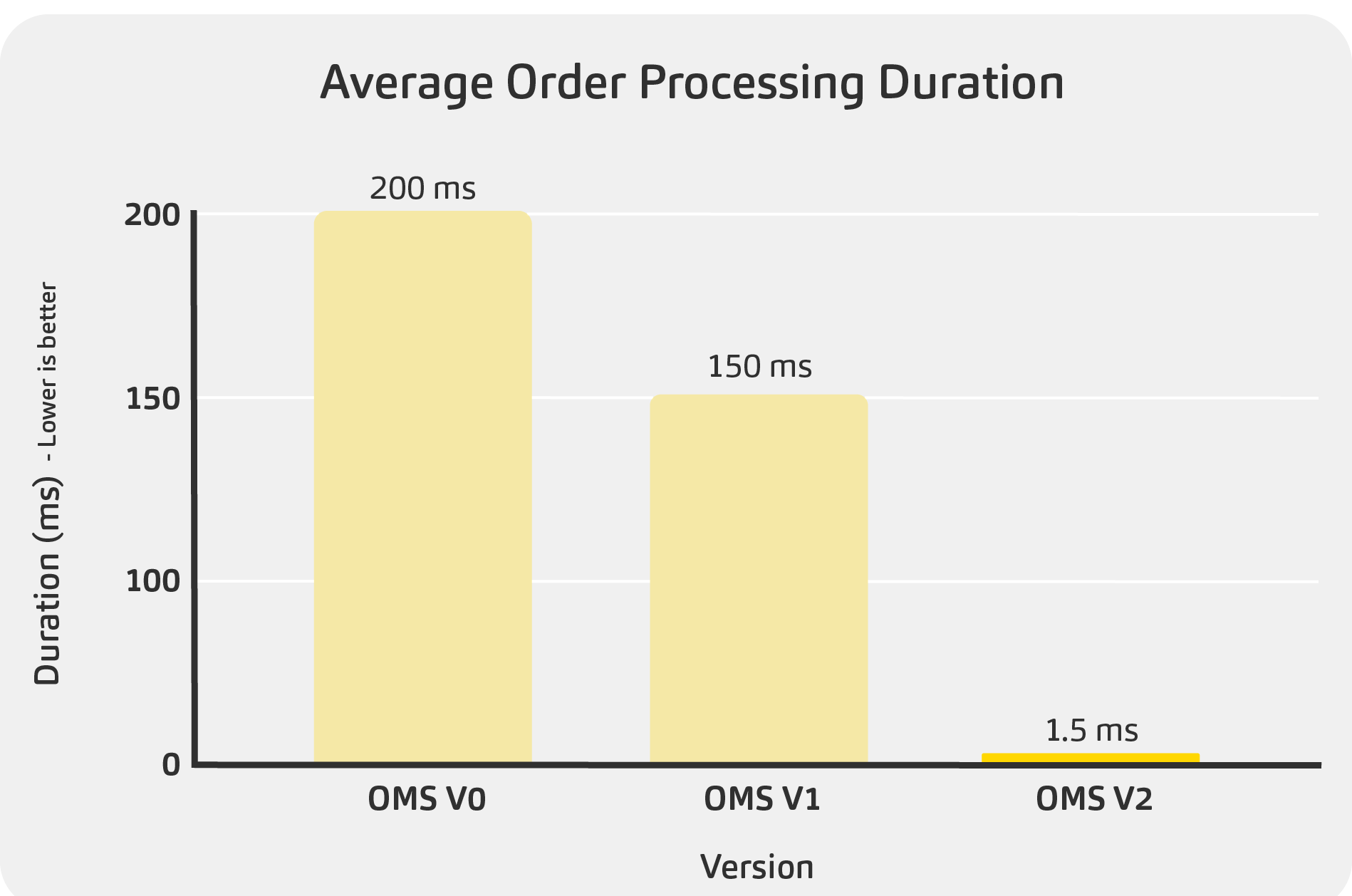

Our new Order Management System (OMS v2) is now being rolled out to all accounts and is two orders of magnitude faster than our previous OMS. Even during extremely heavy trading volumes, OMS v2 maintains consistently low latency. Order processing time has dropped from ~150ms (with a maximum of up to 500ms during heavy volume) to typically under 1.5ms!

What does an Order Management System (OMS) for Trading do?

At Alpaca, the OMS is the interstitial service between every client order and the market. As the backbone of our trading service, the OMS needs to process orders efficiently, which at a high level involves:

- Request Processing and Validation: When an order-related request is received, we must determine which account (and, optionally, which existing order) it relates to and ensure that the order parameters are valid.

- Account State: To validate the order, we need to associate the request with an account and evaluate the account’s buying power, current positions, etc. Since each processed order affects the account’s effective buying power and asset positions, order processing is inherently sequential per account.

- Market Venue Routing: Depending on parameters of the order and account, we route the order to the appropriate market venue through rule processing.

- Execution Report Processing: Market venues respond with FIX messages, known as Execution Reports, for order acknowledgements, partial fills, fills, cancels, etc. The OMS must parse these execution reports so that the order state reflects what the market has returned.

- Complex Order Triggers: At Alpaca, we support a wide variety of complex order types. Some order types require triggers that the OMS must execute when the conditions of the order type are met.

Because order processing is sequential per account, reducing per-order processing time has a dramatic effect on how quickly and efficiently orders reach the market. Since OMS v2 is entirely in memory and not dependent on any other systems and services, we eliminated the data marshaling and network round-trip overhead that our previous OMS incurred.

A chronological history of Order Management Systems at Alpaca

V0: Order Management as part of our Platform Application

When Alpaca originally launched, order management was handled within our platform application like many other endpoints. This served us well initially but was not highly performant, since each order request had to build the account state for every individual order. Average order processing time was estimated at ~200 ms. On every order request, we relied on a database lock acting as a per-account mutex, so that concurrent order requests for the same account were processed sequentially in FIFO order.

V1: Order Management as a discrete singleton service: No database locks

To address some of these performance concerns and eliminate account-level database locks, we built a discrete order management system as a new, independent service. OMS v1 had an HTTP interface that our platform API could send requests to, and the OMS singleton created a single goroutine (a lightweight thread managed by the Go runtime) per account. This was more performant, eliminated the need for database locks, and served us very well, but it was a single point of failure and had non-deterministic performance characteristics under heavy load (such as at market open or during highly volatile trading periods). In addition, OMS v1 relied on our primary database for reading and writing account state, and each order required several round-trip queries to the database. As a result, OMS v1's latency was only modestly better than its predecessor's: average order processing was around ~150ms but could reach 500ms per order under heavy load.
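A minimal Go sketch of the goroutine-per-account pattern described for OMS v1 follows. It assumes each account's requests are funneled through a dedicated channel, so a single goroutine handles them one at a time, in the order they reach the channel, and owns that account's state without any lock. The `Dispatcher` type and its methods are hypothetical names, not OMS v1's actual code.

```go
package main

import (
	"fmt"
	"sync"
)

// request is one order-related call for an account, with a channel for the reply.
type request struct {
	orderID string
	reply   chan string
}

// Dispatcher starts one goroutine per account and routes that account's
// requests through its own channel, so they are handled serially.
type Dispatcher struct {
	mu     sync.Mutex
	queues map[string]chan request
	wg     sync.WaitGroup
}

func NewDispatcher() *Dispatcher {
	return &Dispatcher{queues: make(map[string]chan request)}
}

// Submit enqueues a request for an account, starting the account's goroutine
// on first use, and blocks until the request has been processed.
func (d *Dispatcher) Submit(accountID, orderID string) string {
	d.mu.Lock()
	q, ok := d.queues[accountID]
	if !ok {
		q = make(chan request, 64)
		d.queues[accountID] = q
		d.wg.Add(1)
		go d.worker(accountID, q)
	}
	d.mu.Unlock()

	r := request{orderID: orderID, reply: make(chan string, 1)}
	q <- r
	return <-r.reply
}

// worker is the only goroutine that touches this account's state,
// so the state needs no mutex.
func (d *Dispatcher) worker(accountID string, q chan request) {
	defer d.wg.Done()
	processed := 0 // stand-in for real account state
	for r := range q {
		processed++
		r.reply <- fmt.Sprintf("%s: %s processed (#%d)", accountID, r.orderID, processed)
	}
}

// Close stops every worker after its queued requests drain.
// It must only be called once all Submit calls have returned.
func (d *Dispatcher) Close() {
	d.mu.Lock()
	for _, q := range d.queues {
		close(q)
	}
	d.mu.Unlock()
	d.wg.Wait()
}

func main() {
	d := NewDispatcher()
	fmt.Println(d.Submit("acct-1", "o1")) // acct-1: o1 processed (#1)
	fmt.Println(d.Submit("acct-1", "o2")) // acct-1: o2 processed (#2)
	fmt.Println(d.Submit("acct-2", "o3")) // acct-2: o3 processed (#1)
	d.Close()
}
```

Different accounts proceed in parallel while each account stays strictly serial. The whole map of workers still lives in one process, which is the single-point-of-failure trade-off mentioned above.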

V2: Horizontally scalable, in-memory Order Management System: 100x faster

With OMS v2, we invested heavily in making our order management system highly scalable and two orders of magnitude faster than the previous version. To achieve this, we built a horizontally scalable, in-memory order management system. We exceeded our original performance goal of bringing order processing from 100-500ms in v1 to consistently under 5ms. After extensive benchmarking and stress testing, and many iterations of performance engineering, we are now able to process 85% of orders in under 1.5ms and 95% of orders in under 5ms!

Demo: Processing 1 million fractional orders in less than one minute

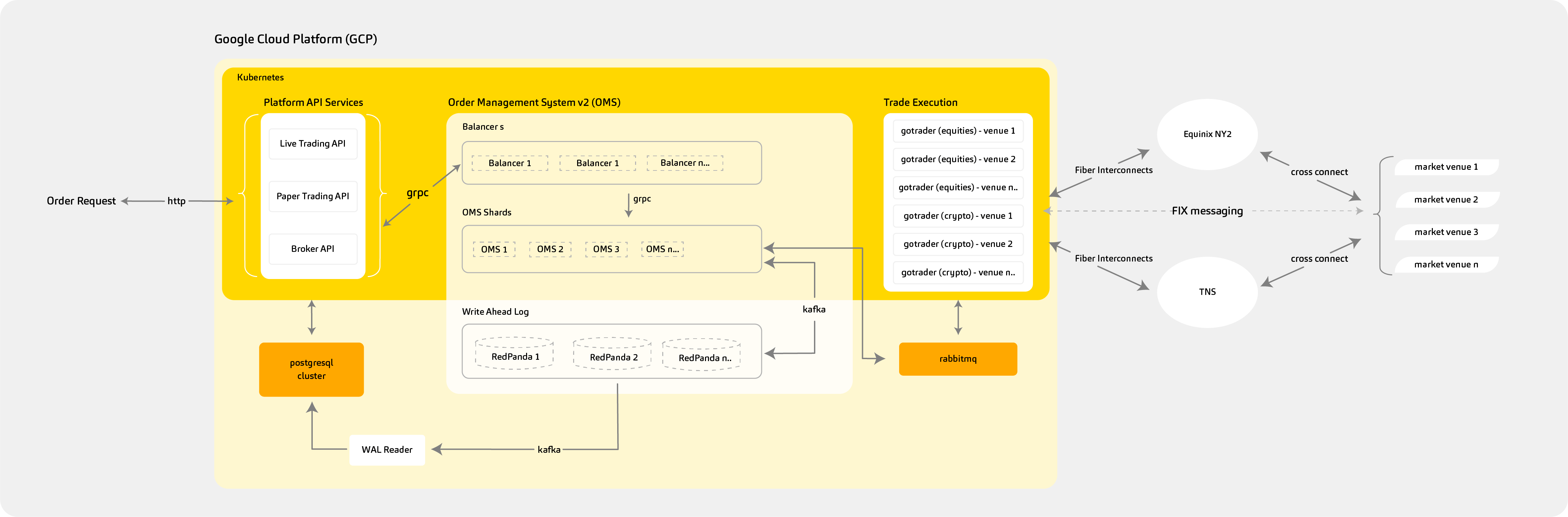

OMS v2 Key Components

OMS Nodes with In-Memory State

At the core of OMS v2 are horizontally scalable OMS nodes that maintain account state entirely in memory. Each OMS node is responsible for a particular set of accounts (defined via our sharding strategy). By keeping all account state in memory, we avoid the significant overhead of the round trips that would be required if account state lived in a traditional RDBMS. In effect, we shift account and position state from a single relational database to a set of distributed nodes, each maintaining the state of a subset of accounts.
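The post does not describe the sharding strategy, so the following Go sketch assumes a simple one: hash the account ID with FNV-1a, take it modulo the number of shards, and let each node own one shard and hold only that shard's accounts in memory. `ShardFor`, `Node`, and `AccountState` are hypothetical names chosen for illustration.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// ShardFor maps an account ID to one of numShards shards with FNV-1a.
// Hypothetical: the post does not describe Alpaca's actual sharding strategy.
func ShardFor(accountID string, numShards uint32) uint32 {
	h := fnv.New32a()
	h.Write([]byte(accountID)) // hash.Hash.Write never returns an error
	return h.Sum32() % numShards
}

// AccountState is the in-memory state kept for one account.
type AccountState struct {
	BuyingPower float64
	Positions   map[string]float64
}

// Node is one OMS instance. It owns a single shard and keeps only
// the accounts in that shard in memory.
type Node struct {
	Shard     uint32
	NumShards uint32
	accounts  map[string]*AccountState
}

func NewNode(shard, numShards uint32) *Node {
	return &Node{Shard: shard, NumShards: numShards, accounts: make(map[string]*AccountState)}
}

// Account returns the in-memory state for an account this node owns.
// A real node would hydrate missing accounts from the database; this
// sketch simply creates empty state on first use.
func (n *Node) Account(accountID string) (*AccountState, error) {
	if s := ShardFor(accountID, n.NumShards); s != n.Shard {
		return nil, fmt.Errorf("account %s belongs to shard %d, not %d", accountID, s, n.Shard)
	}
	a, ok := n.accounts[accountID]
	if !ok {
		a = &AccountState{Positions: make(map[string]float64)}
		n.accounts[accountID] = a
	}
	return a, nil
}

func main() {
	const numShards = 4
	nodes := make([]*Node, numShards)
	for i := range nodes {
		nodes[i] = NewNode(uint32(i), numShards)
	}
	owner := ShardFor("acct-1", numShards)
	_, err := nodes[owner].Account("acct-1")
	fmt.Println("owning node:", owner, "error:", err)
	_, err = nodes[(owner+1)%numShards].Account("acct-1")
	fmt.Println("other node error:", err)
}
```

Plain hash-modulo sharding remaps most accounts whenever the shard count changes; consistent hashing or an explicit account-to-shard table avoids that at the cost of more bookkeeping.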

Distributed Write-Ahead Log (WAL)

For durability and recovery, we built a write-ahead log powered by Redpanda (a Kafka-compatible event streaming platform built on top of Seastar). When an OMS instance starts up, it rebuilds state by hydrating account state from a database and then replaying events from Redpanda, starting from the last known offsets of the partitions that node consumes. An added benefit is that other services can listen to trade and order events by consuming the WAL in an entirely decoupled fashion.
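The recovery sequence (hydrate from a database, then replay the WAL from the last known offset) can be sketched in Go as follows. To keep the example self-contained, a slice of events stands in for a Redpanda partition and no Kafka client is used; the `Event` and `Snapshot` shapes, and tracking only buying power, are hypothetical simplifications.

```go
package main

import "fmt"

// Event is one hypothetical WAL record. Offset mirrors a Redpanda/Kafka
// partition offset; Delta is a change to the account's buying power.
type Event struct {
	Offset    int64
	AccountID string
	Delta     float64
}

// Snapshot is account state hydrated from the database, plus the offset of
// the last WAL event already reflected in it (-1 if none).
type Snapshot struct {
	BuyingPower map[string]float64
	LastOffset  int64
}

// Recover rebuilds in-memory state by copying the snapshot and then applying,
// in order, every WAL event whose offset is after the snapshot's LastOffset.
// It assumes wal is sorted by offset, as events within one partition are.
func Recover(snap Snapshot, wal []Event) (map[string]float64, int64) {
	state := make(map[string]float64, len(snap.BuyingPower))
	for id, bp := range snap.BuyingPower {
		state[id] = bp
	}
	last := snap.LastOffset
	for _, ev := range wal {
		if ev.Offset <= last {
			continue // already reflected in the snapshot
		}
		state[ev.AccountID] += ev.Delta
		last = ev.Offset
	}
	return state, last
}

func main() {
	wal := []Event{
		{Offset: 0, AccountID: "acct-1", Delta: -100},
		{Offset: 1, AccountID: "acct-2", Delta: 50},
		{Offset: 2, AccountID: "acct-1", Delta: -25},
	}
	// The snapshot was taken after offset 0 had been applied.
	snap := Snapshot{BuyingPower: map[string]float64{"acct-1": 900, "acct-2": 500}, LastOffset: 0}
	state, last := Recover(snap, wal)
	fmt.Println(state["acct-1"], state["acct-2"], last) // 875 550 2
}
```

Skipping events at or below the snapshot's offset is what makes recovery safe to repeat: replaying the same WAL against the same snapshot always yields the same state.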

Balancer

We use stateless load balancers that learn about the OMS nodes via configuration. Our externally facing platform API communicates with OMS v2 via gRPC calls to our OMS balancer pool. The balancers maintain gRPC connections to each healthy OMS node and route calls from the API to the appropriate OMS instance.

Next Steps

While we are pleased with the significant performance and scalability improvements our new OMS affords us, we already have a roadmap for further improving order processing time, reducing tail latencies, and increasing throughput in our order execution services.

In our next blog post, we will dive deeper into specific architecture decisions for OMS and our future work.

Items of Note and Thank You

We’d like to thank vectorized.io for developing Redpanda and for their guidance in designing our write-ahead log. They also introduced us to Travis Bischel, the creator of franz-go, the Kafka Go client we use within OMS v2. Travis was extremely helpful in providing suggestions and adding changes to franz-go to support our use case.

If you are evaluating event streaming platforms, we highly recommend Redpanda. Redpanda’s speed and simplified deployment (no need for ZooKeeper!) made it a pleasure to work with, both during development and in production. Based on our experience, we plan to leverage it across many of our services.


The testimonials, statements, and opinions presented on the website are applicable to the specific individuals. It is important to note that individual circumstances may vary, and may not be representative of the experience of others. There are no guarantees of future performance or success. The testimonials are voluntarily provided and are not paid, nor were they provided with free products, services or any other benefit in exchange for said statements.

Alpaca Securities LLC and AlpacaDB are not affiliated with Redpanda.

This article is for general informational purposes only and is believed to be accurate and reliable as of posting date but may be subject to change. All images are for illustrative purposes only.

Brokerage services are provided by Alpaca Securities LLC ("Alpaca"), member FINRA/SIPC, a wholly-owned subsidiary of AlpacaDB, Inc.

Technology and services are offered by AlpacaDB, Inc.