In the first part, we described the need for this project. In a nutshell, it helps users run multiple algorithms in parallel using the Alpaca websocket service.

In this post I am going to explain the internal implementation of the project, giving the more curious developer some insight into what is going on under the hood.

Let's first talk about the tech stack:

- Docker

- Python 3.6

- Python packages:

  - alpaca-trade-api SDK

  - the websockets package

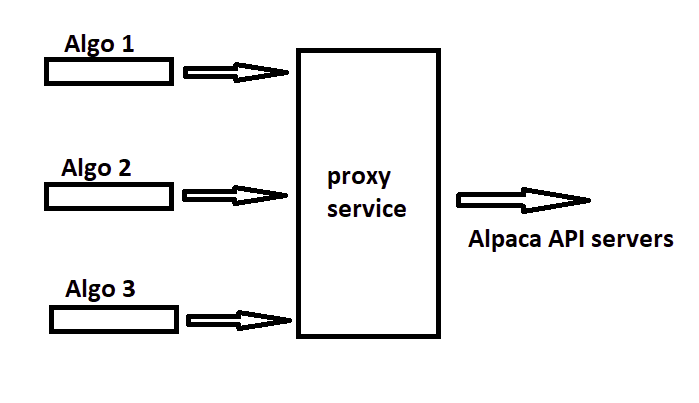

Proxy-Agent Architecture

At its core, this project contains a websocket server and a websocket client.

The websocket server allows multiple algorithms to connect to it and register for specific channels (stock updates, account updates, wildcards, etc.). Each client is stored in a map, so when a message is received it is routed to the right client.
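A minimal sketch of such a client map might look like the following. It is not the project's actual code: the `ChannelRegistry` class, the client ids, and the channel names are all hypothetical, and wildcard channels are left out for brevity.

```python
from collections import defaultdict


class ChannelRegistry:
    """Hypothetical map from channel name to the clients subscribed to it."""

    def __init__(self):
        self._subscribers = defaultdict(set)

    def subscribe(self, client_id, channels):
        # Remember which client asked for which channel.
        for channel in channels:
            self._subscribers[channel].add(client_id)

    def unsubscribe_all(self, client_id):
        # Called when a client disconnects.
        for clients in self._subscribers.values():
            clients.discard(client_id)

    def recipients(self, channel):
        # Clients that should receive a message arriving on `channel`.
        return set(self._subscribers.get(channel, ()))


# Illustrative channel names only.
registry = ChannelRegistry()
registry.subscribe("algo-1", ["T.AAPL", "trade_updates"])
registry.subscribe("algo-2", ["T.AAPL", "Q.MSFT"])
```

When a message arrives, the server would look up `registry.recipients(channel)` and send the message only to those connections.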

The websocket client connects to the Alpaca API service and subscribes to all the channels requested by the users' algorithms. When a new user connects, we unsubscribe from the old channels and subscribe to the new ones (the combined set of old and new channels).
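The resubscription step can be sketched as a small set computation. The function name `resubscription_plan` and the channel names are assumptions made for illustration; the project may organize this differently.

```python
def resubscription_plan(current_channels, requested_channels):
    """Return (to_unsubscribe, to_subscribe) when a new client connects.

    Hypothetical helper: everything currently subscribed is dropped, then
    the union of the old and the newly requested channels is subscribed.
    """
    current = set(current_channels)
    combined = current | set(requested_channels)
    return sorted(current), sorted(combined)


# Illustrative channel names only.
old, new = resubscription_plan(["T.AAPL"], ["T.AAPL", "Q.MSFT"])
```

Here `old` lists the channels to unsubscribe from and `new` lists the combined set to subscribe to afterwards.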

That means that when a new algorithm connects, the other algorithms experience a websocket disconnection. That is not a problem if we do this at the beginning of the trading day (which makes sense), but we must make sure that the clients try to reconnect (which they should do anyway).
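On the algorithm side, a reconnect loop could be sketched roughly like this. It assumes a hypothetical coroutine `connect_and_listen` that raises `ConnectionError` when the connection drops; a real websocket library may raise its own exception type, which you would catch instead.

```python
import asyncio


async def run_with_reconnect(connect_and_listen, max_attempts=5, base_delay=1.0):
    """Retry a hypothetical `connect_and_listen` coroutine after disconnects."""
    for attempt in range(1, max_attempts + 1):
        try:
            return await connect_and_listen()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # give up after the last attempt
            # Wait a little longer after every failed attempt.
            await asyncio.sleep(base_delay * attempt)
```

The delay grows linearly here only to keep the sketch short; any backoff policy would do.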

Using Python asyncio

The Alpaca Python SDK's websocket object, also known as `StreamConn`, is based on the websockets Python package, which is built on top of Python's standard asynchronous I/O framework (asyncio). So this project is built on top of asyncio as well.

Working with asyncio means understanding the event loop mechanism and how to work correctly with two threads.

The main effect on this project (and a good tip for anyone trying to create a similar project): the event loop that creates a websocket needs to be the one that handles its messages. That seems trivial, but once I introduced another thread (one for the websocket server and one for the websocket client), I had difficulty passing the messages from the API back to the thread that serves the algorithm clients. This was solved by using a Queue to pass the information between threads.
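One way to pass messages from a worker thread to coroutines on an event loop is sketched below. It is only an illustration of the queue idea under stated assumptions: `api_listener`, `forward_to_clients`, and the message strings are hypothetical, and the project's own queue handling may differ.

```python
import asyncio
import queue
import threading

_STOP = object()  # sentinel telling the consumer to exit


def api_listener(out_queue, messages):
    # Runs on its own thread; stands in for the client receiving API messages.
    for message in messages:
        out_queue.put(message)
    out_queue.put(_STOP)


async def forward_to_clients(in_queue, deliver):
    # Runs on the server's event loop and hands each message to `deliver`.
    loop = asyncio.get_event_loop()
    while True:
        # queue.Queue.get() blocks, so run it in the default executor
        # to keep the event loop free for other coroutines.
        message = await loop.run_in_executor(None, in_queue.get)
        if message is _STOP:
            break
        await deliver(message)


async def main():
    received = []

    async def deliver(message):
        received.append(message)

    q = queue.Queue()
    producer = threading.Thread(target=api_listener, args=(q, ["trade-1", "quote-1"]))
    producer.start()
    await forward_to_clients(q, deliver)
    producer.join()
    return received
```

The thread-safe `queue.Queue` is the only object the two threads share, so the coroutine that owns the client connections is also the one that sends to them.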

Another question was how to execute two "while" loops on the same event loop. So let's first define the tasks that need to be executed:

- Server listener - waiting for new clients, subscribing to new channels.

- Websockets client - connecting to the API servers, waiting for new messages to arrive.

- Sending the received messages to the right algorithm client.

Of course, there are more things to do, but those are the main ones.

So we run the websocket client on one thread but, as I mentioned, the thread that handles the incoming clients needs to be the one sending them the received messages (same event loop). So we have two "while" loops here:

- one that listens to incoming clients (algorithms)

- one that handles incoming messages (quotes/trades/etc.) and routes them to the right client.

Both should run on the same thread, using the same event loop.

For that purpose I use `asyncio.gather()`, an asyncio function that allows us to run these infinite async "while" loops on the same thread.
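As a rough illustration of that pattern, the sketch below runs two loops on one event loop with `asyncio.gather()`. The coroutine names and messages are hypothetical stand-ins, and a stop event is added so the example terminates; the real loops run indefinitely.

```python
import asyncio


async def accept_clients(events, stop):
    # Stand-in for the loop that waits for new algorithm connections.
    while not stop.is_set():
        events.append("accept: waiting for a client")
        await asyncio.sleep(0.01)  # yield control to the other loop


async def route_messages(inbox, events, stop):
    # Stand-in for the loop that routes incoming messages to clients.
    while not stop.is_set():
        message = await inbox.get()
        if message is None:  # sentinel used only to end the demo
            stop.set()
        else:
            events.append(f"route: {message}")


async def main():
    events = []
    stop = asyncio.Event()
    inbox = asyncio.Queue()
    for message in ("trade", "quote", None):
        inbox.put_nowait(message)
    # Both coroutines share this thread and this event loop.
    await asyncio.gather(
        accept_clients(events, stop),
        route_messages(inbox, events, stop),
    )
    return events
```

Each loop gives up control at its `await` points, which is what lets the other one make progress without a second thread.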

Execution/Debugging

As described in the first part, the recommended way to execute the proxy-agent is with Docker. But you may want to run it locally in an IDE to debug it or enhance it. You can. Just do this:

- clone: `git clone https://github.com/shlomikushchi/alpaca-proxy-agent.git`

- cd into the folder

- create a virtualenv (recommended)

- install it and its requirements: `pip install -e .`

- open main.py in the IDE and fire away.

Security

The communication between your algorithm and the proxy-agent does not use SSL. The communication between the proxy-agent and the Alpaca server does.

So make sure you do not expose the proxy-agent's port to the outside network (especially if you run in the cloud).

Going Live

This is a new project. It should help users develop and test their ideas. My personal recommendation is to use it with your paper account, not your live account, for now. Let it accumulate some usage and have its edge-case bugs fixed before doing that.

Project structure

main.py

* accept new clients

* subscribe to new channels

* send received messages (quotes/trades/...) back to clients

shared_memory_obj.py

Just a Python module trick: define objects (dictionaries, queues) in a shared module and they will be created once. You can call it a poor man's Singleton.

server_message_handler.py

* handles received messages from the API servers

defs.py

* shared constants and Enums

docker files

* Dockerfile used to build the production docker image

* Dockerfile-dev used to build a debugging docker image (could be used to run in an IDE)

* docker-compose.yml - docker compose file to execute the production image locally.

* dev.yml - docker compose file used to run the container locally in debug mode

Again, the project discussed today can be found in the following GitHub repository: https://github.com/shlomikushchi/alpaca-proxy-agent


Brokerage services are provided by Alpaca Securities LLC ("Alpaca"), member FINRA/SIPC, a wholly-owned subsidiary of AlpacaDB, Inc. Technology and services are offered by AlpacaDB, Inc.